Choosing a process mining tool

Process mining is the “hot” topic in our industry these days, and there are a sizable number of process mining tool vendors available in this space. However, the technology is still at the beginning of the hype curve and not a lot of organizations have established process mining as a standard tool in their process analysis toolkit.

In my opinion, it will take a few years for standardization, during which, you will see some market consolidation. We’ve already seen some high-profile M&A in process mining, and I assume that in a few years you will have a smaller number of tool vendors to choose from. But until then, you will also see more innovation and the creation of ecosystems around a tool that will be beneficial for an organization looking for a “future-proof” solution. Most likely you will see those coming from larger organizations that are not necessarily process mining pure players.

What is process mining and how does it work?

Process mining is a relatively old concept and early commercial tools were available as far back as 2001 (Software AG’s Process Performance Manager), but that was too early for organizations to accept into practice – they were busy in implementing ERP systems and standardizing their processes along the lines of their chosen ERP system.

Over the last few years, there has been a renaissance in process mining tools, and a lively group of competitors has developed.

But how does process mining work? In a very simplified way, a process mining tool takes event logs from runtime systems and looks for steps that can be combined into cases, leveraging common identifiers as the common key (usually something like “order number”).

The tool now takes the different instances and “discovers” the sequence of the steps including additional data, such as frequencies, times, etc. When it has discovered different case types, it can combine the results and display a visualization of the process in the tool.

Disclaimer: the screenshots and conceptual graphics in this article show Software AG’s process mining tool (aris-process-mining.com). However, all process mining tools work in a similar fashion.

What are features to look for?

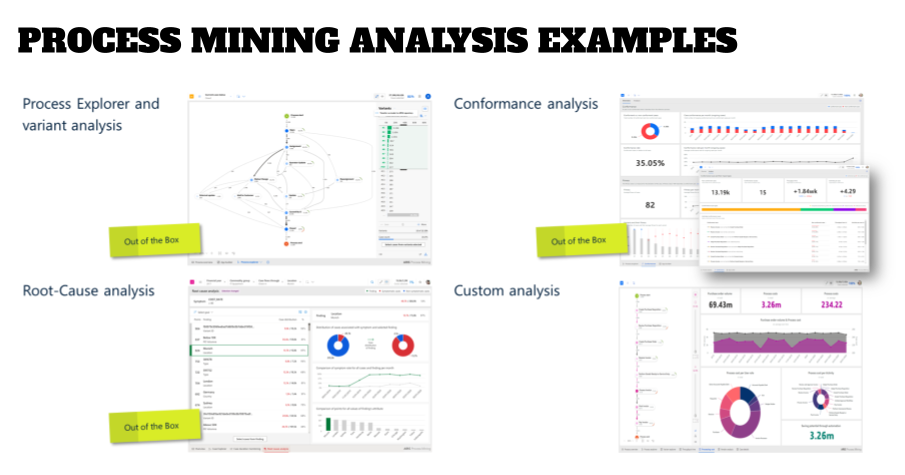

Process mining allows for powerful analysis and platform features provide that host of various out-of-the-box visualizations and configurable KPIs / metrics on dashboards and in reports.

But what should you look for in detail (as of early 2022)?

- The Process Explorer view (in the upper left hand corner of the graphic above) is most likely what new users expect from a process mining tool. It shows all the paths that a process instance went through and displays various measures, such as frequencies (for cases and activities), times (for each step and the transition from step A to step B), or any other measure that you might have collected in your data set – things like cost or vendors, for example.

This allows you to drill down to the finest details in a graphical interface, but it also comes with the need to develop skills on how to “read the data”. For that you should also have a base understanding of statistics, so that you don’t get deceived by “an obvious observation” that is more an artifact of your metric selection (e.g., mean vs. average in times calculation, and the size of your selected sample vs. the overall case population).

Keep in mind that causes for ‘not-so-stellar process performance’ are not one-dimensional, which means you want to have a combination of measures and drill downs in mind when doing your analysis. - Variant analysis is another crucial aspect for a process mining tool. As you can see above, it should be easy to select one or more variants, so that you can see what is going on outside of the “happy path”. Ideally, you can export the discovered process variants into your architecture tool, so that you can do further analysis and solution design for a future transition state implementation (see the Solution Lifecycle article for more details on the bigger picture).

- Once you find anomalies in your process execution and measurement, such as long processing times, the tool should you support you in root cause analysis with a root-cause-miner. That feature automatically goes through the data set for this specific anomaly and allows you to see which aspect is causing the observation – does this happen with a specific vendor, for example, and you can detect a pattern. Or do things always happen at a specific location or with a specific user group?

- If you still cannot completely understand the reason for the anomaly, you might want to have a look at task mining capabilities. Some tools have this built in, but in most of the cases, this is a feature of Robotic Process Automation tools, and might be named “Robotic Process Discovery” (RPD).

This technology works differently than process mining by deploying robots that record screen activities, so that you can see if, for example, a user just don’t know where to click and needs more training, or if information is missing (“that vendor always forget to add the PO reference”), or if a technical interface does not work correctly and the end users mitigate this by being the “swivel chair interface” and type in data from another system manually.

Another use case for task mining is the use of tools that don’t produce even data, such as local desktop apps (like Excel). Task mining can capture these local activities and then provide an aggregated step as input for the process mining tool and complete the process.

Task mining, however, is a much broader topic that is outside of the scope of this article. If you want to learn more about it, I recommend giving episode 9 of the podcast a listen.

- Conformance analysis is another aspect you want to look at – to which degree do users execute the process as it was designed? A good tool will allow you to upload a BPMN reference process, and parse the data to see which instances/variants follow the reference process. Overview dashboards will then show you the degree of compliance, either as an absolute number or over time. More advanced tools will also show you the “fitness value” which is the compliance calculation, not in a black and white view, but looking at the degree of compliance for each variant (“almost compliant except this” vs. “absolutely not compliant”).

The more practical aspect will be the detailed analysis of which conformance issues came up. Things like wrong start events (how many, how long took a case when it started at the wrong step?), unplanned end events, mix-up of sequences (causing rework), or the identification of steps in the executed process that are not part of your reference process model. Those would have to be added, or the process changed in the implementation so that there is no need to do these steps anymore.

A view like this can trigger immediate process improvement activities and act as a punch list. - Custom dashboards will help you to investigate your data set to see if a hypothesis that you made will be supported by the data. This could be for example dashboards that show industry-specific KPIs, such as OTIF (on time, in full) deliveries in a supply chain scenario.

Custom dashboards also allow you to create overviews down to the individual case level, so that you can pinpoint exactly which process instance went wrong and at what step. - In-tool calculations: your data set includes raw data, which might have to be calculated to show, for example, if the segregation of duties has been observed. The tool should have a visual interface (no coding) for creating calculated columns that then can be used as a data source for a dashboard component.

An example of this is “if PO creator is equal to PO approver, then separation of duty is not observed” and “if PO creator is not equal to PO approver, then separation of duty is observed”. Both outcomes can then be displayed in a chart component on the dashboard.

Since these types of data model adaptations are done by business analysts, a graphical user interface for creating the if/then/else statements, with options to nest these, is crucial. - Data transformation is the activity that takes the raw source tables and transforms the fields into the format needed for the process mining tool. Typically this is done via SQL-like statements (“join”, “rename”, and so on). Since this is an “engineer” type of role who will do this, basic coding skills and data modeling skills are helpful for doing this activity.

- Integrations – this is a larger topic and the next chapter will cover the four integration types in more detail.

- Export and import of “solutions” – pre-packaged analysis that already exists (for example provided by consulting organizations) and can be imported easily and will give you pre-built dashboards and instructions/templates for the data collection.

Integrations

By now it is clear that your process mining tool must integrate with other systems. However, there are four different aspects you should look at when evaluating integrations.

Data import from runtime systems

Typically the default import of execution data is via a CSV file. This obviously has advantages and disadvantages – the advantage is that you don’t have to build an integration with the source system and you can rely on reports from the source system. The disadvantage is that the data is not “live” and you might have to transform the source data outside of the process mining tool into the format that the process mining tool can process (see above).

You might want to consider a live integration, and for this you typically see two options:

- Dedicated extraction adapters for source systems or generic adapters, such as a JDBC adapter that can be used on any database supporting this protocol. For obvious reasons, this approach has its limitations (there are too many potential source systems out there), but you should look for support for major business applications.

- The use of an integration tool. Integration tools typically have more adapters available (in the hundreds or even more), and they also allow the development of custom interfaces. While this might help connect a lot more systems, it also comes with more complexity and the potential need for coding skills.

In the graphic below all three import scenarios are depicted using Software AG’s tool stack.

Integration with a Task Mining tool

Some process mining tools have task mining capabilities already built in. In case that your chosen tool does not have this, you should look for a possibility to integrate task mining results into your process analysis (see some example use cases above).

Integration with your architecture tool

Process mining is an excellent tool to get a deep understanding of your as-is processes and, depending on your data set, related aspects such as cost or compliance. However, it is not meant to create a future state design, and it is limited to the underlying data set.

For these aspects you want a proper architecture tool that allows you to do analysis or simulation, even if the future state process is completely different than the discovered as-is. In addition, your architecture tool should support the full solution lifecycle, which means it needs to be capable of containing your Enterprise Baseline to resolve the situation where “what was designed” does not match “what was implemented” (the potential discrepancy between the solution design and implementation phases), for example.

Therefore you want two integrations:

- Upload of reference processes from your design platform into the process mining tool (see above).

- Download of discovered processes from process mining to the design platform. This feature allows you to skip as-is workshops with stakeholders and SMEs and will produce a baseline model that is based on the actual process execution.

An added benefit of the second feature is that you can use a “real” Simulation engine that allows you to set parameters for your future-state process that might be completely different than your as-is data.

Using process mining analysis results to trigger actions

The last integration that you are looking for is the ability to trigger actions based on process mining results, such as process instances or steps that run too long. You should be able to define the conditions that will trigger the action -times, volumes, values- and then provide a web hook or native integration with a workflow component. This could be either built into the process mining tool, or trigger an integration engine that you might already have in place (in the graphic above it would be the webMethods.io tool). Potential actions could be notifications and alerting, or the execution of transactions in runtime systems.

With this integration, the lines between a design/analysis tool and a runtime tool start to blur and you should have a closer look how deep you want your architecture group go down the rabbit hole. Since this can be quite an effort, my recommendation is that you work with the business and IT groups that are responsible for the runtime tools and stop at providing the hook for the workflow component, but not operating it.

Hosting and cost

Most Process Mining tools are now “cloud only” and this seems to be the world that we are living in. Some vendors offer on-premise versions of their tools, but have a close look to see if these are the same offerings that are available as Saas versions, or are older legacy tools that most likely won’t get updates anymore.

When it comes to licensing, there is not “standard” pricing between vendors, but the components of the price tag are similiar:

- A base fee for providing the process mining tool. This typically includes the CSV upload and some base storage and/or number of cases. Please note, that typically this fee will be calculated for one (1) process that shall be mined, independent of the number of applications you pull data from.

That means that you should have a look at your End-to-End process structure and see how many of those will be candidates for process mining. Since the overall price tag can grow quickly, it is worth revisiting this. - Storage or number of cases – those numbers can start from 250,000 cases being included in the base fee, up to hundreds of millions for a 4XL package.

- Data extraction: depending on the type of integration (specific extractors or [cloud] integration tools with more flexibility), consumption fees might occur. While the extractors might be included for free, cloud integration components typically come with a consumption price tag (number of cases, most likely).

- User subscription fees are the last category of costs that you might encounter. Typically you see relatively cheap viewer roles, business analyst, and technical engineer roles being defined that might be needed for the different types of integrations vs. just the base tool.

- Solution costs – as discussed above, some process mining tools allow to pre-package analysis for instant use. Those might come with a price tag if they are not included in the base tool and will be provided by consultants or other members in the tool ecosystem.

It is worth the time to have a close look at what your needs and data volume are, as well as how the package from the vendor might look like at the beginning of your negotiations. Keep in mind that you most likely will have additional consulting costs, especially when you are new to this whole topic and you don’t have an infrastructure established (and now take a deep breath and get familiar with an initial price tag of $40-100k for your first process ;-)).

Roland Woldt is a well-rounded executive with 25+ years of Business Transformation consulting and software development/system implementation experience, in addition to leadership positions within the German Armed Forces (11 years).

He has worked as Team Lead, Engagement/Program Manager, and Enterprise/Solution Architect for many projects. Within these projects, he was responsible for the full project life cycle, from shaping a solution and selling it, to setting up a methodological approach through design, implementation, and testing, up to the rollout of solutions.

In addition to this, Roland has managed consulting offerings during their lifecycle from the definition, delivery to update, and had revenue responsibility for them.

Roland has had many roles: VP of Global Consulting at iGrafx, Head of Software AG’s Global Process Mining CoE, Director in KPMG’s Advisory (running the EA offering for the US firm), and other leadership positions at Software AG/IDS Scheer and Accenture. Before that, he served as an active-duty and reserve officer in the German Armed Forces.