How to Ensure Success with Process Mining: The Six Steps Every Company Should Follow

By Roland Woldt

Over the past few years, there’s been a lot of hype about process mining. Businesses across the globe are adopting mining tools to take advantage of data-driven insights that allow them to streamline their business operations. They do this to save time and reduce costs, helping companies achieve strategic goals and maintain a competitive advantage.

For many of these businesses, the idea they’re sold on is to go big. More is better, right? Many believe that the more systems and processes a mining tool is plugged into from the start, the better the eventual result.

It’s a Big Bang approach to process mining that makes sense on the surface. But for most companies, this approach fails to deliver. Instead, their process mining project drags on forever, racking up equal measures of cost and disappointment.

By the end of the process, these businesses may be left disillusioned — wondering what went wrong and what they paid for. They may abandon process mining altogether at this stage, labeling it a failed venture.

Unfortunately, those companies lose out on the real and transformative value of a process mining initiative. This is value that they could have achieved dozens of times over if they had just started a little bit smaller.

Starting small

Pick a single process, prove the value, and move on to your next process. It’s worth assigning a short time frame to each project at this stage as well so that it doesn’t drag on too long and you can then iterate on the results.

This “start small” approach builds momentum. And what many companies need to realize is that momentum is a deliverable.

In other words, when people start seeing results, they become excited about what process mining offers. It then becomes more likely that mining initiatives will be applied to other processes and systems, building value for the company with every step.

Another common mistake is to start with the biggest process problems. It seems intuitive, but going big is not necessarily the best approach.

Big takes time, and the idea is to start seeing benefits immediately. So, it’s far better to start with something small and solvable but still impactful, and to limit the initial data used in a project to the essentials of what’s needed.

So, how do you do that? How do you limit the scope of your initial foray into process mining and maximize the chances of a mining initiative bringing real value to your business?

It’s simple. You break it down into six essential steps.

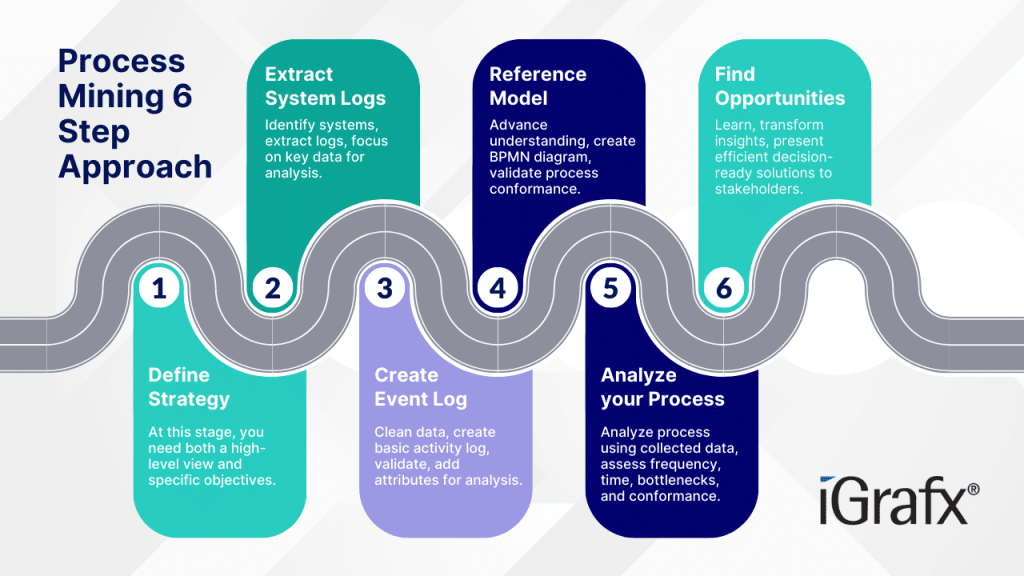

The Six-Step Process Mining Project Approach

To maximize the chance of success, you must start with a clear goal and time frame in mind and build iteration into the project right off the bat. These concepts are summarized in the figure below:

Now, let’s go through what all of this means in practice.

Step 1: Define your strategy

Why are you undertaking a process mining initiative in the first place? You may want to improve operational efficiency. But what does that mean for your company? What does a good process look like? And which process are you targeting?

You may want to break this down even further, by dividing a macro process (like source-to-pay) into smaller chunks. So your focus might be to improve a sub-process of source-to-pay, like accounts payable.

Once you’ve defined these points, the next step is to determine your hypotheses.

For example: “Our internal incident management process isn’t running smoothly, and a large percentage of incidents seem to be getting escalated. It might be because of poor quality equipment or it may be region dependent.”

At this stage, you need both a high-level view and specific objectives. These objectives will guide you in the next steps of your mining project, and you’ll return to them when determining its success.

Step 2: Extract system logs

Step two begins with identifying the systems you must examine to answer your hypotheses. You should, at this stage, have a “napkin-level” understanding of your process (i.e., a “boxes and arrows” outline).

Once you’ve identified the systems involved, it’s time to extract system logs for use in the next stage. Again, remember to keep things simple.

The core of what you need is three pieces of data: a case ID (that allows you to trace steps through the process and across systems), steps (activity names), and time stamps (when does each activity happen and how long does it take?). You don’t need to extract every additional attribute related to your cases in the initial stages.
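To make those three fields concrete, here is a minimal sketch in Python of what such an extract could look like once loaded. The `Event` class, the `build_event_log` helper, and the incident rows are all hypothetical illustrations, not the export format of any particular system or mining tool.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    """One row of a minimal event log (hypothetical structure)."""
    case_id: str         # ties steps together across systems
    activity: str        # name of the step
    timestamp: datetime  # when the step happened


# Made-up rows standing in for a system log extract.
raw_rows = [
    ("INC-002", "Incident escalated", "2024-03-01T10:00:00"),
    ("INC-001", "Incident opened", "2024-03-01T09:00:00"),
    ("INC-001", "Incident resolved", "2024-03-01T11:30:00"),
    ("INC-002", "Incident opened", "2024-03-01T09:15:00"),
    ("INC-002", "Incident resolved", "2024-03-02T16:45:00"),
]


def build_event_log(rows):
    """Parse raw rows and order them by case, then by time."""
    events = [Event(c, a, datetime.fromisoformat(t)) for c, a, t in rows]
    return sorted(events, key=lambda e: (e.case_id, e.timestamp))


event_log = build_event_log(raw_rows)
for event in event_log:
    print(event.case_id, event.timestamp.isoformat(), event.activity)
```

Everything beyond these three fields (region, equipment brand, and so on) can be added later, once the basic log has been shown to reflect the process.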

At this stage, it may be tempting to connect your process mining tool directly to systems to extract data in near-real time. But while that does have tremendous value in the long run, for the purposes of getting your initial (small, focused) process mining project off the ground, it also adds unnecessary complexity and time. Consider it a natural expansion opportunity once you’ve delivered results.

Step 3: Create event log

With the raw system data in hand, you can create a basic data model. This involves three steps:

- Ensure your data is “clean.” That means checking formatting (for example, of dates and times) and removing entries with missing data.
- Create a minimum viable activity log. This is the simplest version of your event log (case ID, activity names, and time stamps). You should plug this basic model into your mining tool to see if it resembles the process you’re investigating.
- Now, decide which attributes to add to answer your hypotheses. Going back to the original example of incident management, perhaps you need to know equipment brands, or which offices and regions each of your incidents comes from.

Importantly, at this stage, you should also validate your data. This means having the process experts in your team look over the data to see if it matches what’s in the systems. This ensures that your later conclusions are based on data that everyone has agreed is correct, even if they don’t particularly like what the revealed process looks like.
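The cleaning step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the column names (`case_id`, `activity`, `timestamp`), the two date formats, and the sample rows are hypothetical, and a real extract will likely need its own rules.

```python
from datetime import datetime

# Assumed date formats; replace with whatever your source systems emit.
DATE_FORMATS = ("%Y-%m-%d %H:%M:%S", "%d/%m/%Y %H:%M")


def parse_timestamp(text):
    """Return a datetime if the text matches a known format, else None."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt)
        except ValueError:
            continue
    return None


def clean_rows(rows):
    """Split rows into usable events and rejects with missing or bad data."""
    cleaned, rejected = [], []
    for row in rows:
        case_id = (row.get("case_id") or "").strip()
        activity = (row.get("activity") or "").strip()
        timestamp = parse_timestamp(row.get("timestamp") or "")
        if case_id and activity and timestamp:
            cleaned.append(
                {"case_id": case_id, "activity": activity, "timestamp": timestamp}
            )
        else:
            rejected.append(row)  # keep rejects so experts can review them
    return cleaned, rejected


# Made-up sample rows: two usable, one without a case ID, one with a bad date.
rows = [
    {"case_id": "INC-001", "activity": "Incident opened", "timestamp": "2024-03-01 09:00:00"},
    {"case_id": "INC-001", "activity": "Incident resolved", "timestamp": "01/03/2024 11:30"},
    {"case_id": "", "activity": "Incident opened", "timestamp": "2024-03-01 09:15:00"},
    {"case_id": "INC-003", "activity": "Incident opened", "timestamp": "not a date"},
]

cleaned, rejected = clean_rows(rows)
print(len(cleaned), "usable rows,", len(rejected), "rejected")
```

Returning the rejected rows rather than silently dropping them gives the process experts something specific to look at during validation.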

Step 4: Create Reference Model

Now it’s time to move beyond the napkin-level understanding and get to the nuts and bolts of how your process is meant to work.

This means creating a reference model, a BPMN diagram with your full process’s roles, steps, and logic. Essentially, you’re answering the question: “How should this process run?”

The answer to that may just be a basic structure for your process. Or it might have to do with whether that process meets SLA targets or ISO regulations, or one of a million other things. This reference model now serves as an additional data input for your analysis and will help you to check conformance, i.e., does your actual process work the way the ideal version says it should? Or are your people and systems doing something vastly different?

It’s worth noting that this step may not always be necessary (or even useful), depending on your context and hypotheses. If, for example, you’re trying to determine why incidents are taking so long to resolve, you may not need to check for conformance, whereas for compliance issues you would almost certainly need to.

Ideally, though, this step will give you an understanding of how a process should work that can be compared to how it is actually working, as established by the data model you created in Step 3.
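As a rough illustration of what a conformance check does, the sketch below reduces a reference model to a set of allowed transitions and flags any step in a case that the model does not permit. This is a deliberate simplification: a real BPMN model also carries roles, gateways, and other logic, and mining tools use more sophisticated techniques. The `ALLOWED` table, the activity names, and the traces are hypothetical.

```python
# Hypothetical reference model: for each activity, the activities allowed next.
ALLOWED = {
    "START": {"Incident opened"},
    "Incident opened": {"Incident assigned"},
    "Incident assigned": {"Incident resolved", "Incident escalated"},
    "Incident escalated": {"Incident resolved"},
    "Incident resolved": {"END"},
}


def find_deviations(trace):
    """Return the transitions in a trace that the reference model forbids."""
    path = ["START", *trace, "END"]
    return [
        (current, following)
        for current, following in zip(path, path[1:])
        if following not in ALLOWED.get(current, set())
    ]


# Made-up traces: the ordered activities observed for each case.
traces = {
    "INC-001": ["Incident opened", "Incident assigned", "Incident resolved"],
    "INC-002": ["Incident opened", "Incident resolved"],  # skips assignment
}

deviations = {case_id: find_deviations(trace) for case_id, trace in traces.items()}
for case_id, found in deviations.items():
    print(case_id, "conforms" if not found else f"deviates at {found}")
```

In this example the second case is flagged because it jumps straight from opening to resolution, which the assumed model does not allow.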

Step 5: Analyze your process

Using the information you’ve collected in the previous steps, you can finally start to analyze your process. A lot can go into this stage, and the factors analyzed tie back to your original hypotheses and objectives. Some general considerations at this stage are:

Frequency: How often does each process pathway occur? If, for example, only 5% of your cases are going awry, that may not be a big issue. But if it’s 85%? Now there’s a much larger problem, and a potentially huge opportunity for adding back value.

Time: Which steps are taking the longest? How long does it take to get from Step A to Step B?

Bottlenecks: Where is your process getting stuck? And what does that mean for subsequent steps?

Conformance: Is your process running as it was designed? Are teams applying best practices? Is the process being executed in compliance with internal policy and external regulation?
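The frequency, time, and bottleneck questions can all be answered from the same minimal event log. The following Python sketch, using made-up incident data and hypothetical activity names, counts how often each pathway occurs and averages the time between consecutive steps. Treating the slowest average transition as the bottleneck is a simplifying assumption for illustration.

```python
from collections import Counter, defaultdict
from datetime import datetime
from statistics import mean

# Made-up events: (case ID, activity, timestamp).
events = [
    ("INC-001", "Opened", "2024-03-01T09:00:00"),
    ("INC-001", "Assigned", "2024-03-01T09:30:00"),
    ("INC-001", "Resolved", "2024-03-01T11:30:00"),
    ("INC-002", "Opened", "2024-03-01T10:00:00"),
    ("INC-002", "Assigned", "2024-03-01T10:30:00"),
    ("INC-002", "Resolved", "2024-03-01T14:30:00"),
    ("INC-003", "Opened", "2024-03-02T08:00:00"),
    ("INC-003", "Assigned", "2024-03-02T09:00:00"),
    ("INC-003", "Escalated", "2024-03-02T10:00:00"),
    ("INC-003", "Resolved", "2024-03-03T10:00:00"),
]

# Group events by case and order each case by time.
traces = defaultdict(list)
for case_id, activity, stamp in events:
    traces[case_id].append((datetime.fromisoformat(stamp), activity))
for steps in traces.values():
    steps.sort()

# Frequency: how often does each pathway (variant) occur?
variant_counts = Counter(
    tuple(activity for _, activity in steps) for steps in traces.values()
)

# Time: average hours between each pair of consecutive steps.
durations = defaultdict(list)
for steps in traces.values():
    for (t1, a1), (t2, a2) in zip(steps, steps[1:]):
        durations[(a1, a2)].append((t2 - t1).total_seconds() / 3600)
avg_hours = {pair: mean(hours) for pair, hours in durations.items()}

# Bottleneck candidate: the transition with the longest average wait.
slowest = max(avg_hours, key=avg_hours.get)

for variant, count in variant_counts.most_common():
    print(f"{count / len(traces):.0%}", " -> ".join(variant))
print("Slowest transition:", slowest, f"{avg_hours[slowest]:.1f} h")
```

With real data, the same grouping can be sliced by the extra attributes chosen in Step 3 (region, equipment brand) to test the original hypotheses.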

A good tool allows you to visualize these factors, to see exactly where issues are occurring, and to present that data in a way that any stakeholder can easily understand.

This is, therefore, an opportunity to present the analysis to relevant stakeholders within the company. It’s also an opportunity to determine whether the analysis should proceed in the same way or whether it’s time to refocus or change your hypotheses. This might mean going back to your data model, or you may need to revisit your systems and logs to extract the data required to answer new questions.

The key is that it’s iterative. And the shorter time frame associated with initially tackling smaller projects makes figuring out what to focus on far easier.

Step 6: Find opportunities

Finally, take what’s been learned — the how, what, and why of your process — and transform that information into process improvements. This step loops right back to your original question: What does a good process look like? And what are the factors that are holding it back?

Answering those questions will help you decide what the company’s next steps should be. Depending on your context, that may mean automating certain parts of your process. Or adding employees to address a bottleneck.

The point is that by tackling a smaller project, you’ve found potential solutions to problems quickly, instead of the traditional approach of spending months (or even years) and hundreds of thousands of dollars before you might find opportunities to drive business value.

When presenting solutions to stakeholders, ensure they’re in a “decision-ready format” that shows the options and tradeoffs (“if you improve in times or capacity then your cost might go up”) and what your recommendations are (“you can do A, B, or C, but I recommend A because it provides the highest return on investment”).

Transforming process into advantage

Over time, the value captured by leveraging this six-step approach over multiple projects accumulates into something much bigger: a transformed process landscape and an interconnected set of systems that function optimally.

Adding in the process design and simulation capabilities offered by the iGrafx Process360 Live platform, including the ability to simulate how proposed process changes will perform in the future, it is possible to create optimized and ideal versions of each analyzed process. Versions that deliver results and a real return on investment.

Getting there, however, isn’t a case of throwing everything at the wall to see what sticks. It’s about making a small and impactful start that will snowball into the kind of process excellence that transforms your processes into a real business advantage.

(This is a repost from iGrafx’ blog found here: https://www.igrafx.com/blog/how-to-ensure-success-with-process-mining-the-six-steps-every-company-should-follow/)

Roland Woldt is a well-rounded executive with 25+ years of Business Transformation consulting and software development/system implementation experience, in addition to leadership positions within the German Armed Forces (11 years).

He has worked as Team Lead, Engagement/Program Manager, and Enterprise/Solution Architect for many projects. Within these projects, he was responsible for the full project life cycle, from shaping a solution and selling it, to setting up a methodological approach through design, implementation, and testing, up to the rollout of solutions.

In addition to this, Roland has managed consulting offerings throughout their lifecycle, from definition through delivery to update, and had revenue responsibility for them.

Roland has had many roles: VP of Global Consulting at iGrafx, Head of Software AG’s Global Process Mining CoE, Director in KPMG’s Advisory (running the EA offering for the US firm), and other leadership positions at Software AG/IDS Scheer and Accenture. Before that, he served as an active-duty and reserve officer in the German Armed Forces.